2025 was the year of AI agents. Devin writes code autonomously. AutoGPT chains tasks without supervision. Every startup pitch includes "agentic workflows" somewhere in the deck.

The promise is seductive: describe what you want, and the agent figures out how to build it. No more micromanaging AI. Just outcomes.

I spent three weeks trying to build a feature entirely through agentic tools, deliberately keeping my hands off the steering wheel. The experience was instructive. Not because it failed completely. Because it succeeded at all the wrong things: it satisfied the task's terms.

What Agents Do Well

Agents excel at well-defined tasks with clear success criteria: the tests pass, the email sends. Write tests for this function. Deploy this code. Send this email when this condition is met.

The task decomposition is impressive, and it is a big part of the appeal. Devin can genuinely break a coding task into subtasks, execute them, and course-correct when tests fail. Cognition Labs reports success rates above 80% on standard coding benchmarks.

For product development, this matters. Repetitive tasks can now be delegated. Boilerplate disappears, and with it much of the day-to-day busywork.

But here is what agents cannot do: they cannot tell you whether the feature should exist in the first place. They do not ask "should we?"

The Product Thinking Gap

I asked an agent to "build a notification center for our SaaS product." It did not hesitate.

It built one. Technically competent. Clean code. Working notifications. What was missing was the why.

The notification center had no concept of our users; it was built for no one in particular. It did not know that our users are overwhelmed by notifications from other tools. It did not consider that we should probably show fewer notifications, not more. It did not surface the edge case where a user returns from vacation to find 200 unread items.

The agent optimized for the task: build a notification center. It had no ability to question whether that was the right task.

This is the product thinking gap. Agents execute. They do not explore.

Where Human Judgment Still Wins

Problem Definition. Is this the right problem to solve? Agents assume the problem is given; humans decide whether it is.

User Context. How do users actually behave, not hypothetically but in practice? Agents have no access to the tacit knowledge PMs build through conversations with users.

Strategic Fit. Does this feature advance the product's positioning? Agents cannot evaluate competitive dynamics.

Trade-off Navigation. What are we willing to sacrifice: time, scope, polish, or risk? Every product decision involves trade-offs that agents cannot see.


A 2024 MIT Sloan study found that human-AI collaboration outperformed pure AI automation by 34% on complex, ambiguous problems. The advantage disappeared on well-defined tasks.

Product problems are almost always complex and ambiguous.

The Right Division of Labor

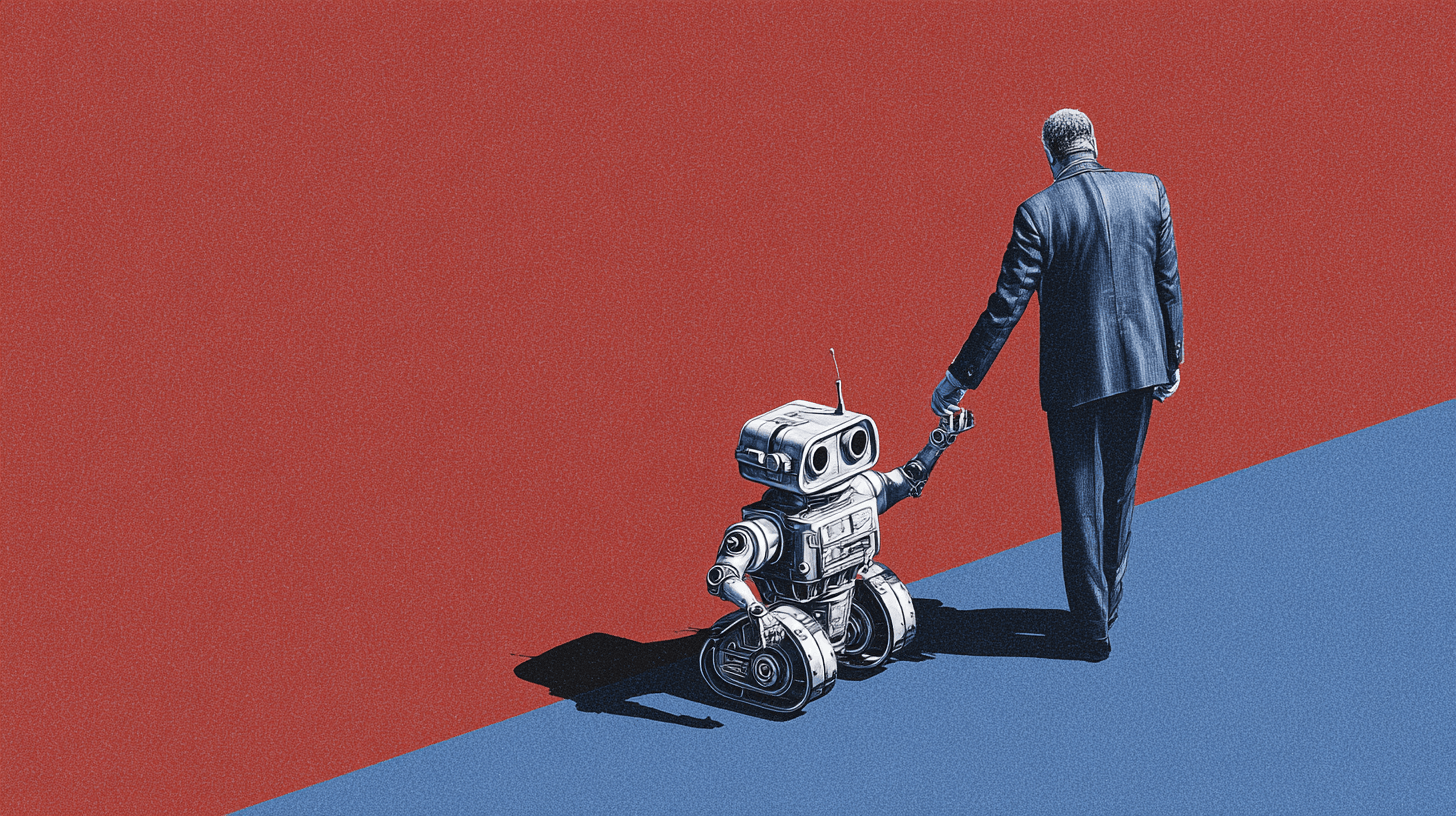

Agents should handle execution. Humans should handle judgment.

This is not just a practical split. It reflects something fundamental about what AI is good at versus what humans are good at.

AI pattern-matching can surface edge cases and design patterns, but the PM decides which patterns apply. The AI explores alternatives, but the PM chooses the direction.

The Basic Gist

The agentic revolution is real. Execution tasks are being automated at an accelerating pace.

But product thinking is not an execution task. It is a judgment task. The features you should build. The users you should serve. The trade-offs you should accept. Each one requires judgment grounded in human context that no agent can access.

In Short

Use agents for execution. Use AI for exploration and pattern-matching. Keep product thinking where it belongs: with humans who understand users, strategy, and trade-offs. PMs should not worry; they should recalibrate.

The PM role is not threatened by agents. It is elevated: less execution, more judgment.

→ Try Figr for AI that augments product thinking rather than replacing it